DNS over HTTPS (DoH) is a hot discussion point among both privacy and DNS
people. There are many criticisms, including encryption support in the clients
and still having to trust a third party. There is an excellent talk from Bert
Hubert on this topic.

In this post, we will learn how to set up our own personal DoH server. I am not
posting any tips on iptables rules; you should be able to add those based on
which services you run on the server.

We will use unbound as the recursive DNS server in our setup. On the server, we
can easily install it via the OS package manager (apt/dnf).

Getting the root name servers' details

We should get a fresh copy of the root name servers' details, and then we can
have a cron job fetch a fresh copy every six months.

curl --output /etc/unbound/root.hints https://www.internic.net/domain/named.cache
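For the cron job, it is safer not to write straight into /etc/unbound, because a failed download would leave unbound with a broken hints file. Here is a minimal sketch of one way to do it. The function names are my own, and the sanity check (looking for ROOT-SERVERS.NET in the file) is an assumption about what a good copy looks like, not an official validation.

```shell
#!/bin/sh
# Sketch: refresh root.hints without leaving a broken file in place.
# The URL is the one used above; the function names are illustrative.

HINTS_URL="https://www.internic.net/domain/named.cache"

# Succeed only if the file is non-empty and mentions the root servers.
looks_like_root_hints() {
    [ -s "$1" ] && grep -qi 'ROOT-SERVERS\.NET' "$1"
}

# Download to a temporary file, validate it, then move it to the destination ($1).
refresh_root_hints() {
    dest="$1"
    tmp="$(mktemp)" || return 1
    if curl --fail --silent --show-error --output "$tmp" "$HINTS_URL" \
        && looks_like_root_hints "$tmp"; then
        # mktemp creates the file as 0600; make it readable for the unbound user
        chmod 644 "$tmp" && mv "$tmp" "$dest"
    else
        rm -f "$tmp"
        return 1
    fi
}

# Example:
#   refresh_root_hints /etc/unbound/root.hints && systemctl restart unbound
```

If you save this as a script that ends with the example call (say, at the hypothetical path /usr/local/sbin/refresh-root-hints), a crontab entry such as `0 4 1 1,7 * /usr/local/sbin/refresh-root-hints` would run it on the first of January and July.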

Setting up unbound

I am using the following configuration:

# Unbound configuration file for Debian.
#
# See the unbound.conf(5) man page.
#
# See /usr/share/doc/unbound/examples/unbound.conf for a commented
# reference config file.
#
# The following line includes additional configuration files from the
# /etc/unbound/unbound.conf.d directory.
include: "/etc/unbound/unbound.conf.d/*.conf"

server:
    root-hints: "/etc/unbound/root.hints"
    interface: 127.0.0.1
    # open ACL is harmless while unbound only listens on 127.0.0.1;
    # tighten it if you ever change the interface line
    access-control: 0.0.0.0/0 allow
    use-syslog: yes

Then check the configuration with the unbound-checkconf command.

# unbound-checkconf
unbound-checkconf: no errors in /etc/unbound/unbound.conf

If it reports no errors, enable and start the service.

systemctl enable unbound
systemctl start unbound
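Before moving on, it is worth confirming that unbound really answers on localhost. The sketch below assumes the dig tool is installed (it usually comes from the dnsutils or bind-utils package); the function name is only for illustration.

```shell
# Succeed if the resolver at $1 returns at least one A record for the name $2.
resolver_answers() {
    # dig exits non-zero when it gets no reply at all (for example on a timeout)
    answer="$(dig +short +time=3 +tries=1 @"$1" "$2" A)" || return 1
    [ -n "$answer" ]
}

# Example:
#   resolver_answers 127.0.0.1 dgplug.org && echo "unbound is answering"
```

If this fails, `journalctl -u unbound` is the first place I would look.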

Set up Nginx along with certbot

Set up Nginx and use the certbot tool to get the TLS certificate. Here is the
configuration I am using; the main point to notice is the upstream section.

upstream dns-backend {
    server 127.0.0.1:8053;
    keepalive 30;
}

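server {
    listen 80;
    listen [::]:80;
    server_name yourdomain;
    root /var/www/html;

    location /.well-known/acme-challenge {
        alias /var/www/leftover;
    }

    # a server-level return would fire before the location above is matched,
    # so the redirect lives in its own location and keeps the request URI
    location / {
        return 301 https://$host$request_uri;
    }
}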

server {
    # http2 on the listen line enables HTTP/2 (requires nginx version >= 1.9.5);
    # on nginx >= 1.25.1 the preferred form is "listen 443 ssl;" plus "http2 on;"
    listen 443 ssl http2;
    server_name yourdomain;
    index index.html;

    ssl_certificate /etc/letsencrypt/live/yourdomain/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/yourdomain/privkey.pem;

    # Turn on OCSP stapling as recommended at
    # https://community.letsencrypt.org/t/integration-guide/13123
    # requires nginx version >= 1.3.7
    ssl_stapling on;
    ssl_stapling_verify on;

    # modern configuration. tweak to your needs.
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';
    ssl_prefer_server_ciphers on;

    # Uncomment this line only after testing in browsers,
    # as it commits you to continuing to serve your site over HTTPS
    # in future
    # add_header Strict-Transport-Security "max-age=31536000";

    rewrite ^(.*).php https://www.youtube.com/watch?v=dQw4w9WgXcQ last;

    # maintain the .well-known directory alias for renewals
    location /.well-known {
        alias /var/www/yourdomain/.well-known;
    }

    location / {
        root /var/www/html;
        index index.html;
    }

    # hand DoH requests to the doh-server defined in the upstream section
    location /dns-query {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Host $http_host;
        proxy_set_header X-NginX-Proxy true;
        proxy_set_header Connection "";
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_redirect off;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_read_timeout 86400;
        proxy_pass http://dns-backend/dns-query;
    }
}

Then start the Nginx server.
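A typo in a configuration this long is easy to make, so I like to let Nginx validate the file before touching the running service. A small sketch, assuming a systemd-based server; the function name is made up for this post.

```shell
# Validate the configuration first; only touch the service if it parses.
apply_nginx_config() {
    nginx -t || return 1
    # "enable --now" starts the service if needed and enables it at boot;
    # the reload picks up the new configuration if Nginx was already running.
    systemctl enable --now nginx && systemctl reload nginx
}

# Example:
#   apply_nginx_config || echo "fix the errors reported by nginx -t first"
```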

Setting up the DoH server

You will have to set up Go (golang), as we have to compile the project. The
first step is always the git clone :)

git clone https://github.com/m13253/dns-over-https.git

cd dns-over-https

make

make install

As the next step, we will modify the configuration file of the dns-over-https
tool at /etc/dns-over-https/doh-server.conf. There are many different
configuration options available; I am using only a small subset of them. Check
the GitHub repo for an up-to-date commented configuration example.

listen = [
    "127.0.0.1:8053",
    "[::1]:8053"
]
local_addr = ""
cert = ""
key = ""
path = "/dns-query"
upstream = [
    "udp:127.0.0.1:53"
]
timeout = 10
tries = 3
verbose = false
log_guessed_client_ip = false

The upstream entry asks the tool to talk to the unbound instance running on the
same server, and the listen address matches the upstream section of the Nginx
configuration.

Next, we can start and enable the service. Remember to check the logs for any

errors due to typos.

systemctl restart doh-server

systemctl enable doh-server
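To tell a doh-server problem apart from an Nginx problem, you can query the service directly on localhost, bypassing Nginx. This sketch assumes the listen address and path from the configuration above; the function name is illustrative.

```shell
# Query the doh-server JSON endpoint directly on localhost, bypassing Nginx.
# $1 is the name to look up, $2 the record type (defaults to A).
doh_local_query() {
    curl --silent --fail --max-time 5 \
        "http://127.0.0.1:8053/dns-query?name=$1&type=${2:-A}"
}

# Example:
#   doh_local_query dgplug.org | python3 -m json.tool
#   journalctl -u doh-server --since "10 minutes ago"
```

If the direct query works but the one through Nginx does not, the problem is most likely in the proxy configuration.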

Testing the setup

You can test the setup with curl, piping the response through Python's
json.tool module to get readable output.

curl -s "https://yourdomain/dns-query?name=dgplug.org&type=A" | python3 -m json.tool

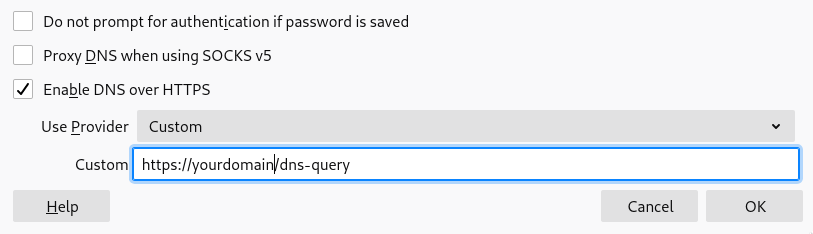

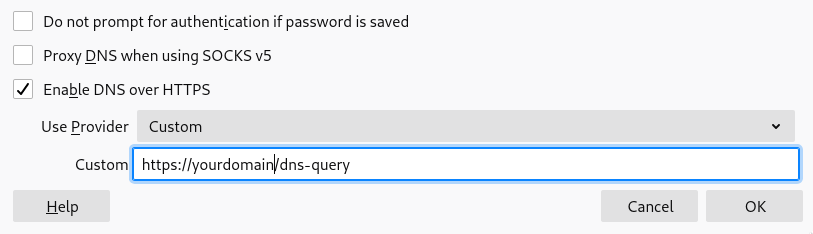

You can now use the server in Firefox: in the General -> Network Settings
section, enable DNS over HTTPS and enter https://yourdomain/dns-query as the
custom provider.
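One caveat: the curl test earlier uses the JSON API, while Firefox sends queries in the binary wire format from RFC 8484. As far as I know, dns-over-https serves both on the same path, but I have not shown that above, so treat this as a sketch for checking it yourself. The function name is my own; it builds a minimal A query (ID 0, recursion desired) and encodes it as unpadded base64url, which is what the `dns=` parameter expects.

```shell
# Build an RFC 8484 "dns" query parameter for an A lookup of the name in $1.
dns_query_b64url() {
    name="${1%.}"    # drop a trailing dot, if any
    {
        # header: ID 0, flags 0x0100 (recursion desired), one question
        printf '\000\000\001\000\000\001\000\000\000\000\000\000'
        # question name: each label is prefixed with its length as one byte
        IFS=.
        for label in $name; do
            printf "\\$(printf '%03o' "${#label}")%s" "$label"
        done
        # root label, then QTYPE=A (1) and QCLASS=IN (1)
        printf '\000\000\001\000\001'
    } | base64 | tr '+/' '-_' | tr -d '=\n'
}

# Example (yourdomain is the placeholder used throughout this post):
#   curl -s -H 'accept: application/dns-message' \
#       "https://yourdomain/dns-query?dns=$(dns_query_b64url dgplug.org)" | od -An -tx1
```

The response is a binary DNS message, so a hex dump is the quickest way to confirm something came back; an empty or HTML response means the request did not reach doh-server in the form it expects.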