Defending against side channel attacks via dependencies

Yesterday Alex Birsan published a blog post explaining how he carried out a supply chain attack on various companies via dependencies. I had been waiting for this post since last August, when we noticed the packages in question on PyPI (and removed them). I reached out to Alex to find out more about the packages, and he said he would write a blog post.

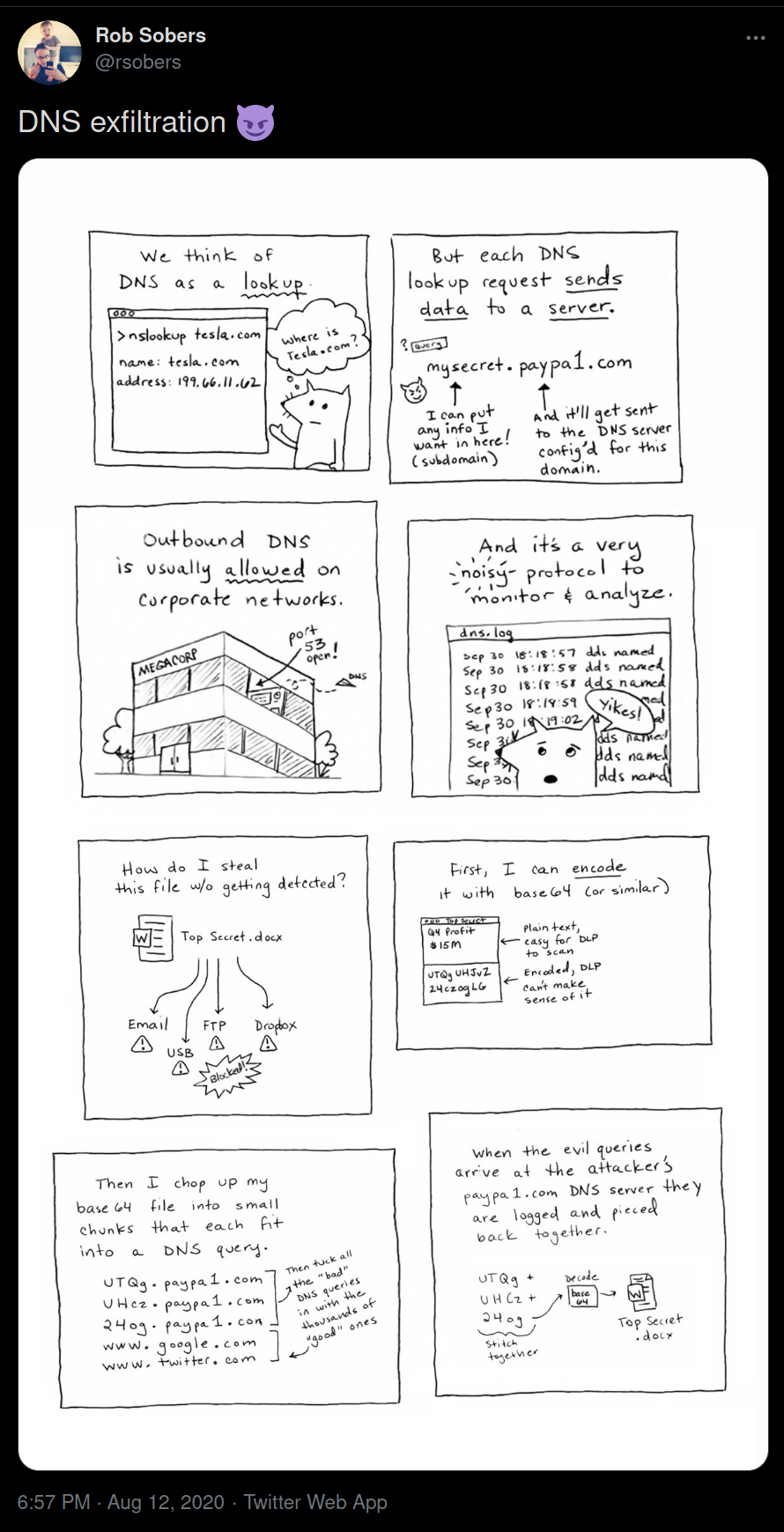

Around the same time, @rsobers also tweeted about how similar attacks work via DNS.

At the SecureDrop project, we spend a lot of time figuring out ways to defend against similar attacks. My PyCon US 2019 talk explained the full process. In simple words, we are doing the following:

- Verify the source of every Python dependency before adding or updating it. This is a manual step done by human eyes.

- Build wheels from the verified source and store them in a package repository along with OpenPGP-signed sha256sums.

- Before building the Debian package, make sure to verify the OpenPGP signature, and also install only from the known package repository using verified (again via OpenPGP signature) wheel hashes (from the wheels we built ourselves).
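The hash-checking part of the steps above can be sketched roughly as follows. This is only an illustration, not the actual SecureDrop tooling: the function names (`sha256_of`, `parse_sha256sums`, `verify_wheels`) are hypothetical, and the sketch assumes the sha256sums file uses the usual `<hex digest>  <filename>` layout and that its OpenPGP signature has already been verified separately (for example with `gpg --verify`) before the contents are trusted.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex sha256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def parse_sha256sums(text: str) -> dict[str, str]:
    """Parse '<hex digest>  <filename>' lines into {filename: digest}."""
    expected = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        digest, name = line.split(maxsplit=1)
        # sha256sum marks binary mode with a leading '*' on the name.
        expected[name.lstrip("*")] = digest
    return expected


def verify_wheels(wheel_dir: Path, sums_text: str) -> list[str]:
    """Return a list of problems; an empty list means everything matched.

    Assumption: sums_text comes from a file whose OpenPGP signature
    was already verified by the caller.
    """
    expected = parse_sha256sums(sums_text)
    present = {w.name: w for w in wheel_dir.glob("*.whl")}
    problems = []
    for name in sorted(present):
        if name not in expected:
            problems.append(f"{name}: not listed in sha256sums")
        elif sha256_of(present[name]) != expected[name]:
            problems.append(f"{name}: hash mismatch")
    for name in sorted(set(expected) - set(present)):
        problems.append(f"{name}: listed but missing")
    return problems
```

A build script could call `verify_wheels()` and refuse to continue if the returned list is not empty.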

In the last few months, we modified these steps and made them even simpler.

Now, all of our wheels are built from the same known sources, built reproducibly, and stored in git (via LFS). The final Debian packages are also reproducible. This comes on top of the OpenPGP signature and package sha256sums verification via pip mentioned above. We also pass the `--no-index` argument to pip now, to make sure that all the dependencies are picked up from the local directory.
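As a rough sketch of what such a pip invocation can look like: the helper names below are hypothetical, and pairing `--no-index` with `--find-links` and `--require-hashes` is my assumption about a reasonable setup, not a copy of the SecureDrop build scripts. `--no-index` stops pip from contacting PyPI, `--find-links` points it at a local directory of wheels, and `--require-hashes` makes it reject any package whose hash is not pinned in the requirements file.

```python
import subprocess
import sys
from pathlib import Path


def pip_install_command(requirements: Path, wheel_dir: Path) -> list[str]:
    """Build a pip command that installs only from a local wheel directory."""
    return [
        sys.executable, "-m", "pip", "install",
        "--no-index",                   # never look at PyPI or any other index
        "--find-links", str(wheel_dir),  # resolve packages from local wheels
        "--require-hashes",             # every requirement needs a pinned hash
        "-r", str(requirements),
    ]


def install_from_local_wheels(requirements: Path, wheel_dir: Path) -> None:
    """Run the install and raise CalledProcessError if pip fails."""
    subprocess.run(pip_install_command(requirements, wheel_dir), check=True)
```

With this kind of setup, a typo-squatted or dependency-confusion package on a public index is never even considered, because pip has no index to ask.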

Oh, and I also forgot to mention that all of the project source tarballs used in SecureDrop workstation package building are also reproducible. If you are interested in using the same in your Python project (or actually for any project's source tarball), feel free to have a look at this function.
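To give an idea of what goes into a reproducible source tarball, here is a minimal sketch using only the Python standard library. It is not the function mentioned above; the names `reproducible_tarball` and `_normalize` are made up for this example. The idea is to remove everything that varies between builds: file order, timestamps, owner information, and the timestamp in the gzip header. Byte-identical output can still depend on the Python and zlib versions used, so treat this as a starting point.

```python
import gzip
import tarfile
from pathlib import Path


def _normalize(info: tarfile.TarInfo, mtime: int) -> tarfile.TarInfo:
    """Strip metadata that differs between machines or build times."""
    info.mtime = mtime
    info.uid = info.gid = 0
    info.uname = info.gname = ""
    # Keep only the executable / non-executable distinction.
    if info.isdir() or info.mode & 0o100:
        info.mode = 0o755
    else:
        info.mode = 0o644
    return info


def reproducible_tarball(src_dir: Path, out_path: Path, mtime: int = 0) -> None:
    """Write src_dir to out_path as a .tar.gz with normalized metadata.

    out_path should live outside src_dir, otherwise the archive
    would try to include itself.
    """
    src_dir = Path(src_dir)
    # Sort paths so the archive order does not depend on the filesystem.
    paths = sorted(src_dir.rglob("*"))
    with open(out_path, "wb") as raw:
        # filename="" and a fixed mtime keep the gzip header constant.
        with gzip.GzipFile(filename="", fileobj=raw, mode="wb", mtime=mtime) as gz:
            with tarfile.open(fileobj=gz, mode="w", format=tarfile.GNU_FORMAT) as tar:
                for path in paths:
                    rel = path.relative_to(src_dir).as_posix()
                    tar.add(
                        str(path),
                        arcname=f"{src_dir.name}/{rel}",
                        recursive=False,  # we already walk the tree ourselves
                        filter=lambda info: _normalize(info, mtime),
                    )
```

Building the tarball twice from the same tree, even after the files have been touched, should then give the same sha256sum.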

There is also a diff-review mailing list (very low volume), where we post a signed message for every source package update we review.