Startup/execution time for a specific command line tool

Generally I don’t have to bother about the startup time of any command line tool. To the human eye, in normal day-to-day usage, a command that takes half a second to finish its job is not much of a problem. But the story changes the moment we talk about a command which we have to run repeatedly. What about a command which has to execute multiple times every minute? This is when startup and execution time start to matter.

A few weeks ago I was looking at a Python script which was executed as part of a Nagios run. It made an API call to a remote server, with the JSON data coming in as command line arguments. To make it scale better, the first thought was to move the actual API call to a different process and have the original script only load things into a Redis queue. But the other issue was the startup time of the Python script itself. Something with a shorter startup time would help more in this case, where Nagios may execute the script/command a few hundred times every minute.
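To make the task concrete, here is a minimal Go sketch of that kind of tool: it takes one JSON document as a command line argument, checks that it parses, and POSTs it. This is not the actual code from the post. The endpoint `apiURL`, the helper `buildRequest`, the 5 second timeout, and the exit code are all assumptions made for illustration.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"os"
	"time"
)

// apiURL is a hypothetical endpoint; the original post does not name one.
const apiURL = "https://api.example.com/events"

// buildRequest checks that payload is valid JSON and wraps it in a POST request.
func buildRequest(url, payload string) (*http.Request, error) {
	if !json.Valid([]byte(payload)) {
		return nil, fmt.Errorf("argument is not valid JSON")
	}
	req, err := http.NewRequest(http.MethodPost, url, bytes.NewBufferString(payload))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	if len(os.Args) != 2 {
		fmt.Fprintln(os.Stderr, "usage: notify '<json>'")
		os.Exit(1)
	}
	req, err := buildRequest(apiURL, os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, "error:", err)
		os.Exit(1)
	}
	// A short timeout (an assumption) keeps a slow server from stalling the caller.
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Do(req)
	if err != nil {
		fmt.Fprintln(os.Stderr, "error:", err)
		os.Exit(1)
	}
	status := resp.StatusCode
	resp.Body.Close()
	if status >= 300 {
		fmt.Fprintln(os.Stderr, "unexpected status:", status)
		os.Exit(1)
	}
}
```

Keeping the request construction in its own function means the validation can be checked without any network access.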

So, first I rewrote the code in Rust, and that made things several times faster. But, just for fun, I wanted to see whether writing it in golang would help or not. And I am kind of surprised to see the difference in startup/execution time. I am using hyperfine for the benchmarks.

Python script

Time (mean ± σ): 195.5 ms ± 11.3 ms [User: 173.6 ms, System: 19.7 ms]

Range (min … max): 184.5 ms … 228.8 ms 12 runs

Rust code

Time (mean ± σ): 31.1 ms ± 8.4 ms [User: 27.3 ms, System: 3.0 ms]

Range (min … max): 24.6 ms … 79.0 ms 37 runs

View the code.

Golang code

Time (mean ± σ): 3.2 ms ± 1.6 ms [User: 1.0 ms, System: 1.7 ms]

Range (min … max): 2.6 ms … 19.6 ms 140 runs

The code.

For now, we will go with the golang-based code to do the work. But if someone can explain how Rust and Golang programs start up differently, that would help me learn why there is such a speed difference.

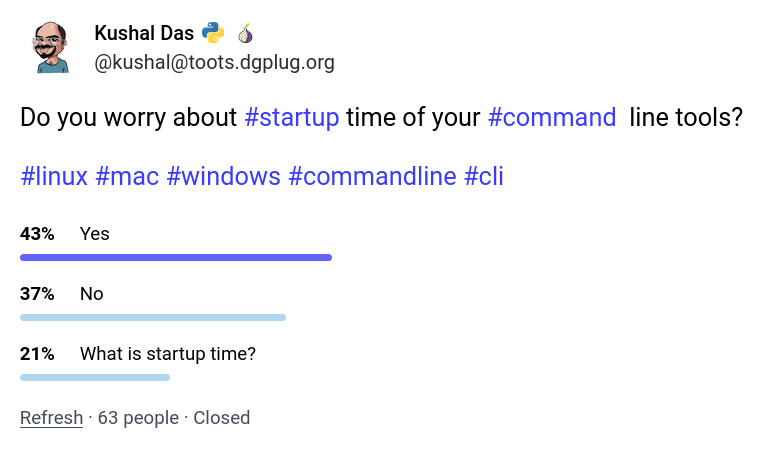

Oh, here is the result of a quick poll on the Fediverse about startup time.

Update

I received a PR for the Rust code, which removes the async POST requests and makes the whole thing even simpler and faster (30x).

Time (mean ± σ): 1.0 ms ± 0.6 ms [User: 0.5 ms, System: 0.3 ms]

Range (min … max): 0.5 ms … 4.3 ms 489 runs