Building wheels and Debian packages for SecureDrop on Qubes OS

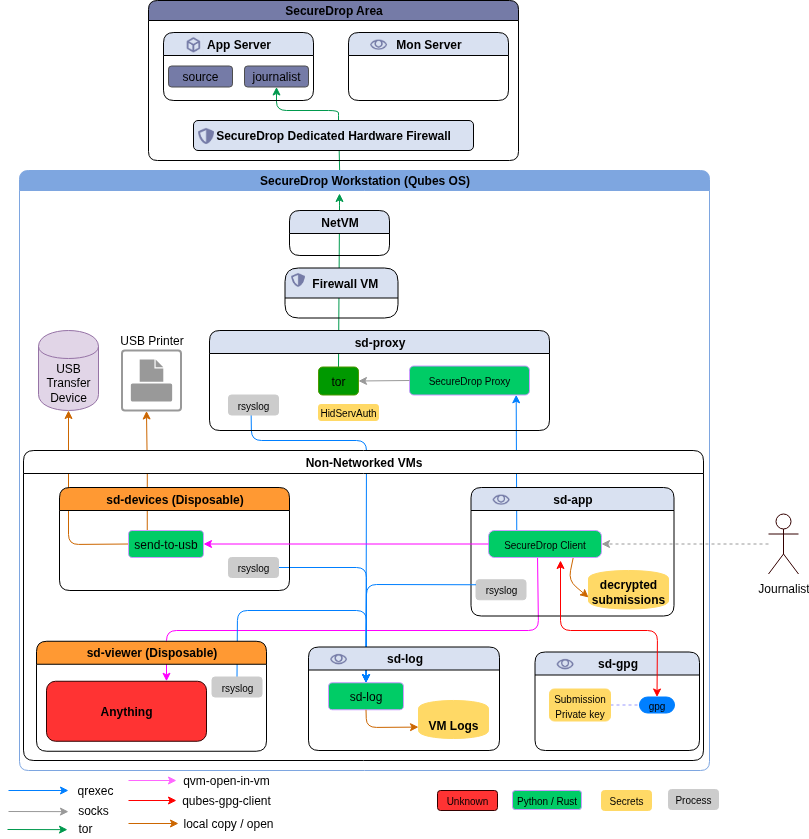

For the last couple of months, the SecureDrop team has been working on a new set of applications and a new system for journalists, based on Qubes OS, along with a desktop application written specifically for Qubes. A major portion of the work is on the Qubes OS side, where we are setting up the right TemplateVMs, the AppVMs on top of those TemplateVMs, the qrexec services, and the right configuration to allow or deny services as required.

The other major piece of work was developing a proxy service (on top of the Qubes qrexec service) that allows our desktop application (written in PyQt) to talk to a SecureDrop server. This work ends up in two different Debian packages:

- securedrop-proxy: contains only the proxy tool

- securedrop-client: contains the Python SDK (to talk to the server through the proxy) and the desktop client tool

The way to build SecureDrop server packages

The legacy way of building the SecureDrop server-side packages has many steps and also installs wheels into the system Python site-packages, which is something we plan to remove in the future. While we were discussing this at PyCon this year, Donald Stufft suggested using dh-virtualenv. It packages a virtualenv for the application, along with the actual application code, into a Debian package.
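As a rough illustration of what "packaging a virtualenv" means, the following Python sketch builds an isolated environment inside a package staging tree instead of the system site-packages. It uses only the standard-library `venv` module; the `stage_virtualenv` helper and the `opt/venvs/<package>` layout are assumptions for illustration, not dh-virtualenv's actual code.

```python
import venv
from pathlib import Path


def stage_virtualenv(staging_root: Path, package: str) -> Path:
    """Create an isolated virtualenv inside a package staging tree.

    Hypothetical helper: the opt/venvs/<package> layout is an assumption.
    """
    target = staging_root / "opt" / "venvs" / package
    # with_pip=False keeps this sketch offline; a real build would also
    # install pip and the hash-pinned requirements into `target`.
    venv.EnvBuilder(with_pip=False, clear=True).create(target)
    return target
```

For example, `stage_virtualenv(Path("debian/securedrop-proxy"), "securedrop-proxy")` would create the environment under `debian/securedrop-proxy/opt/venvs/securedrop-proxy`, leaving the system site-packages untouched; the whole tree is then what ends up inside the .deb.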

The new way of building Debian packages for SecureDrop on Qubes OS

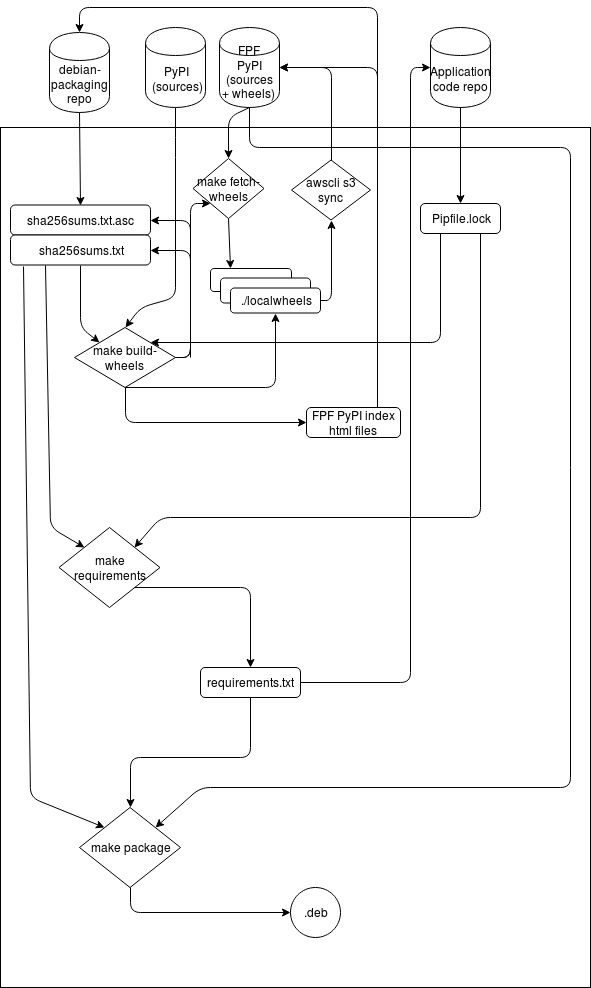

Creating requirements.txt files for the projects

We use pipenv for the development of the projects. `pipenv lock -r` can create a requirements.txt, but it does not contain any sha256sums. We also wanted to make these steps much easier. We have added a makefile target in our new packaging repo, which first creates the standard requirements.txt and then tries to find the corresponding binary wheel sha256sums from a list of wheels+sha256sums. Before anything else, it verifies the list (signed with the developers' gpg keys).

```sh
PKG_DIR=~/code/securedrop-proxy make requirements
```
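The lookup step of that target can be sketched in Python. This is a hypothetical sketch, not the actual makefile target: it assumes the signed list uses `sha256sum`-style lines (`<hex digest>  <filename>`), that requirements are exact `name==version` pins, and that wheel filenames follow the PEP 427 `name-version-...-platform.whl` convention. The gpg verification of the list is assumed to have already passed.

```python
import re
from typing import Dict, List, Optional, Tuple


def load_hash_list(text: str) -> Dict[str, str]:
    """Map filename -> sha256 from sha256sum-style lines ('<digest>  <file>')."""
    hashes = {}
    for line in text.splitlines():
        if line.strip():
            digest, filename = line.split(maxsplit=1)
            # sha256sum marks binary-mode entries with a leading '*'.
            hashes[filename.strip().lstrip("*")] = digest
    return hashes


def normalize(name: str) -> str:
    """PEP 503 normalization, so 'python_dateutil' matches 'python-dateutil'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def wheel_name_version(filename: str) -> Optional[Tuple[str, str]]:
    """Split a PEP 427 wheel filename into (normalized name, version)."""
    if not filename.endswith(".whl"):
        return None
    parts = filename[: -len(".whl")].split("-")
    if len(parts) < 5:
        return None
    return normalize(parts[0]), parts[1]


def add_hashes(requirements: str, hashes: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (lines with --hash options, requirements with no known wheel)."""
    pinned, missing = [], []
    for raw in requirements.splitlines():
        # Drop comments and environment markers; keep only 'name==version'.
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # blanks and pip options such as "-i <index-url>"
        name, _, version = (part.strip() for part in line.partition("=="))
        digests = [
            digest
            for filename, digest in sorted(hashes.items())
            if wheel_name_version(filename) == (normalize(name), version)
        ]
        if digests:
            options = " ".join(f"--hash=sha256:{d}" for d in digests)
            pinned.append(f"{name}=={version} {options}")
        else:
            missing.append(f"{name}=={version}")
    return pinned, missing
```

Anything in the second returned list is a requirement with no signed wheel yet, i.e. the "missing wheels" case. Versions are compared as plain strings here, which is a simplification.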

If it finds any missing wheels (say, a new dependency or an updated package version), it informs the developer. The developer can then use another makefile target to build the new wheels, and the new wheels and sources get synced to our simple index hosted on S3. The hashes of the wheels and sources also get signed and committed into the repository. Then the developer retries creating the requirements.txt for the project.
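Generating entries for the signed list of wheels+sha256sums is mostly hashing. A minimal sketch, assuming a hypothetical `sha256sum`-style format (`<hex digest>  <filename>`) and a local directory holding the freshly built wheels and source tarballs; signing the result (for example with `gpg --detach-sign`) and syncing to S3 are not shown.

```python
import hashlib
from pathlib import Path
from typing import List


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so large source tarballs need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_list_lines(directory: Path) -> List[str]:
    """Return sorted '<sha256>  <filename>' lines for wheels and sdists."""
    lines = []
    for path in sorted(directory.iterdir()):
        if path.name.endswith((".whl", ".tar.gz", ".zip")):
            lines.append(f"{sha256_file(path)}  {path.name}")
    return lines
```

Sorting the output keeps the committed list stable, so a review of the signed commit shows only the genuinely new entries.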

Building the package

We also have makefile targets to build the Debian package. The target creates part of a directory structure in the home directory, similar to what rpmbuild does, then copies over the source tarball, untars it, copies the debian directory from the packaging repository, and re-verifies each hash in the project's requirements file against the current signed (and verified) list of hashes. If everything looks good, it goes on to build the final Debian package. During the build, pip enforces the hashes through the following environment variable, exported in the same script:

```sh
DH_PIP_EXTRA_ARGS="--no-cache-dir --require-hashes"
```
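The re-verification step before the build amounts to checking every `--hash=sha256:` value in the project's requirements file against the digests in the signed (and already verified) list. Below is a hypothetical sketch of that check, assuming the list has already been loaded into a collection of hex digests; it is not the actual script. With `--require-hashes`, pip then repeats a hash check on every file it installs during the build.

```python
import re
from typing import Iterable, List

# pip's hash-checking syntax for one pinned sha256 digest.
HASH_OPTION = re.compile(r"--hash=sha256:([0-9a-f]{64})")


def unverified_requirements(requirements: str, trusted: Iterable[str]) -> List[str]:
    """Return requirement lines that are not fully covered by trusted digests."""
    trusted_set = set(trusted)
    # Join backslash-continued lines so each requirement sits on one line.
    joined = requirements.replace("\\\n", " ")
    failures = []
    for raw in joined.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # blanks, comments and pip options such as "-i"
        pins = HASH_OPTION.findall(line)
        # Fail on a requirement with no pin at all, or with any pin that is
        # not in the signed list.
        if not pins or any(pin not in trusted_set for pin in pins):
            failures.append(line)
    return failures
```

In a script like the one described, a non-empty result would be the signal to stop before building the package.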

Our debian/rules files make sure that we use our own packaging index for building the Debian package.

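```make
#!/usr/bin/make -f

# Hand every target to dh, with the dh-virtualenv sequence enabled.
# The recipe line below must be indented with a tab.
%:
	dh $@ --with python-virtualenv --python /usr/bin/python3.5 --setuptools --index-url https://dev-bin.ops.securedrop.org/simple
```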
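The `--index-url` above points pip at a "simple" repository in the PEP 503 format: one HTML page per project listing file links, optionally with a `#sha256=` fragment that pip checks. How our S3 index is actually generated is not covered here, so treat this as an illustrative sketch; the `project_page` helper and the assumption that files sit next to their page (relative links) are hypothetical.

```python
import html
import re
from typing import Dict


def normalize(name: str) -> str:
    """PEP 503 project-name normalization (runs of -, _, . become one '-')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def project_page(project: str, files: Dict[str, str]) -> str:
    """Render a PEP 503 project page; `files` maps filename -> sha256 hex digest.

    Links are relative, so this assumes the files sit next to the page.
    """
    links = "\n".join(
        f'    <a href="{html.escape(name)}#sha256={digest}">{html.escape(name)}</a><br/>'
        for name, digest in sorted(files.items())
    )
    title = html.escape(normalize(project))
    return (
        "<!DOCTYPE html>\n<html>\n  <head><title>Links for {0}</title></head>\n"
        "  <body>\n    <h1>Links for {0}</h1>\n{1}\n  </body>\n</html>\n"
    ).format(title, links)
```

pip looks up a project at `<index-url>/<normalized-name>/`, so a page for securedrop-proxy would be served under `/simple/securedrop-proxy/`.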

For example, the following command will build the package securedrop-proxy version 0.0.1.

```sh
PKG_PATH=~/code/securedrop-proxy/dist/securedrop-proxy-0.0.1.tar.gz PKG_VERSION=0.0.1 make securedrop-proxy
```

The following image describes the whole process.

We would love to get your feedback and any suggestions to improve the whole process. Feel free to comment on this post, or to create issues in the corresponding GitHub project.