Looking back at the history of dgplug and my journey

During a session of the summer training this year, someone asked about the history of DGPLUG and how I started contributing to it. The story of dgplug has an even longer back-story about my history with Linux. I'll start there, and then continue with the story of dgplug.

Seeing Linux for the first time

During my class 11-12 years, I used to spend a lot of time in the hostels of the Regional Engineering College, Durgapur (or, as we call it, REC Durgapur). This institute is now known as NIT Durgapur. I got to lay my hands on and use a computer (mostly to play games) in my uncle’s hostel room. All the machines I saw there were Windows only.

My Joint Entrance Examination (JEE) center was the REC (year: 2001). That was a known and familiar environment for me. During the break on day 1, I came back to hall 2 (my uncle’s room) for lunch. The room was unusually full; I think more than 7 people were looking at the computer with very anxious faces. One guy was doing something on the computer. My food was kept in the corner, and someone told me to eat quietly. I could not resist, and asked what was going on.

“We’re installing Linux on the computer, and this is a very critical phase.”

“If the mouse works, then it will just work, or else we will not be able to use it in the system at all,” someone replied.

I had to ask again, “What is this Linux?” “Another Operating System” came the reply. I knew almost everyone in the room, and I knew that they used computers daily. I had also seen a few of them writing programs to solve their chemical lab problems (my uncle was in the Chemical Engineering department). There were people from the computer science department too. The thing that stuck in my head, going back to the next examination, was that one guy who knew something which the others did not. It stuck deeper than the actual exam in hand, and I kept thinking about it all that day. Later, after the exam, I actually got some time to sit in front of the Linux computer, and I tried to play with it, trying things and clicking around on the screen. Everything was so different from what I used to see on my computer screen. That was it, my mind was set; I was going to learn Linux, since not many knew about it. I would also be able to help others as required, just like my uncle’s friend.

Getting my own computer

After the results of the JEE were announced, I decided to join the Dr. B. C. Roy Engineering College, Durgapur. It was a private college that had opened a year earlier. On 15th August, I also got my first computer, a Pentium III with 128MB of RAM and a 40GB hard drive. I also somehow managed to convince my parents to get a mechanical keyboard (which was costly and unusual at the time) with this setup. I also got Linux (RHL 7.x IIRC) installed on that computer along with Windows 98. Once it got home, I kept clicking on everything in Linux, ran the box ragged, and tried to find all that I could do with Linux.

After a few days, I had some trouble with Windows and had to reinstall it, and when I rebooted, I could not boot into Linux any more. The option had disappeared. I freaked out at first, but guessed that it had something to do with my Windows reinstall. As I had my Linux CDs with me, I went ahead and tried to install it again. Installing and reinstalling the operating systems over and over taught me that I had to install Windows first, and only then install Linux. Otherwise, I could not boot into Linux.

Introduction to the Command Line

I knew a few Windows commands by that time. Someone in REC pointed me to a book written by Sumitabha Das (I still have a copy at home, in my village). I started reading from there, and learning commands one by one.

Becoming the Linux Expert in college

This was around the same time that people started recognizing me as a Linux Expert, at least in my college.

Of course I knew how to install Linux, but the two major things that helped me get that tag were:

- the mount command: I knew how to mount Windows partitions in Linux.

- the xmms-mp3 rpm package: I had a copy, and I could install it on anyone’s computer.

The same song, on the same hardware, always had much better audio quality when played in XMMS than it ever did in Windows. Just knowing those two things gave me a lot of advantage over my peers in that remote college (we never had an Internet connection in the college, IIRC).

The Unix Lab & Introduction to computer class

We were introduced to computers in our first semester in a special class. Though many of my classmates were seeing a computer for the first time in their lives, we were tasked with practicing many (DOS) commands on the same day. I spent most of my time helping others learn about the hardware and how to use it.

In our college hostel, we had a few really young professors who also stayed with us. Somehow I started talking a lot with them, and tried to learn as many things as I could. One of them mentioned a Unix lab in the college which we were supposed to use in the coming days. I went back to the college the very next day and managed to find the lab; the in-charge (the same person who had told me about it) allowed me to get in and use the setup (there were 20 computers).

Our batch used the lab for only 2-3 days at most. During the first day in the lab, I found a command to send out emails to the other users. I came back during some off hours, wrote a long mail to one of my classmates (not going to talk about the details of the mail), and sent it out.

As we had stopped using that lab, I was sure no one had read that mail. Except that one day, the lab in-charge asked me how my email writing was going. I was stunned: how did he find out about the email? I was spluttering and tongue-tied! Later at night, he explained to me the idea of sysadmins, and all that a superuser can do in a Linux/Unix environment. I started thinking about privacy in the electronic world from that night itself :)

Learning from friends

The only other people who were excited about Linux were two people from the same batch in REC: Bipendra Shrestha and Jitu. I used to spend a lot of time in their hostel and learned so many things from them.

Internet access and the start of dgplug

In 2004, I managed to get more regular access to the Internet (by saving up a bit more money to visit Internet cafes regularly). My weekly allowance was Rs.100, and regular one hour Internet access was around Rs.30-50.

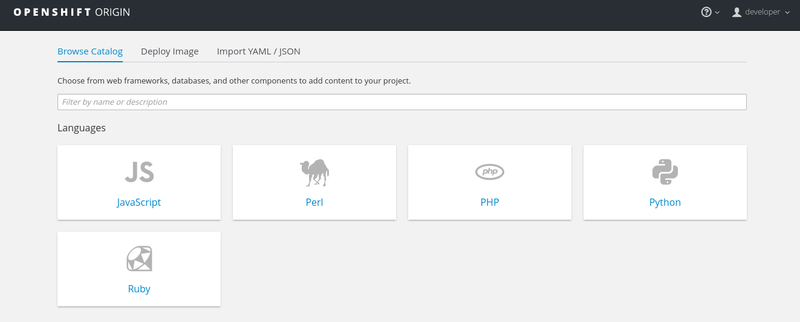

While reading about Linux, I found the term LUG, or Linux User Group. As I was the only regular Linux user in college, I knew that I would never have much chance to learn more on my own there, and that somehow I would have to find more people like me and learn together. Also around the same time, I started learning about words like upstream, contribution, Free Software, and FSF. I managed to contact Sankarshan Mukhopadhyay, who sent me a copy of Ankur Bangla, a Linux running in my mother tongue, Bengali. I also came to know about all the ilug chapters in India. That inspired me. Having our own LUG in Durgapur was my next goal. Soumya Kanti Chakrabarty was the first person I convinced to join me in forming this group.

The first website came up on Geocities (fun times), and we also had our Yahoo group. Later in 2005, we managed to register our domain name; the money came in as a donation from my uncle (who by this time was doing his Ph.D. at IIT Kanpur).

I moved to Bangalore in July 2005, and Soumya was running the local meetings. After I started using IRC regularly, we managed to have our own IRC channel, and we slowly moved most of our discussion over to IRC only. I attended FOSS.IN in December 2005. I think I should write a complete post about that event, and how it changed my life altogether.

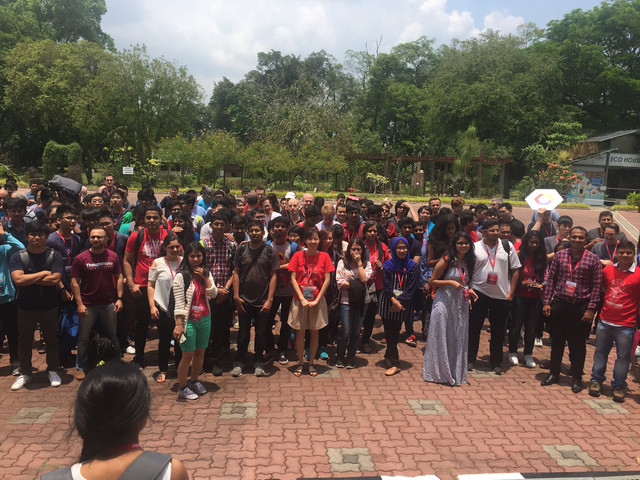

Physical meetings in 2006-2007

A day with Fedora on 4th April 2006 was the first big event for us. Sayamindu Dasgupta, Indranil Dasgupta, and Somyadip Modak came down to Durgapur for this event. This was also when we started the Bijra project, where we helped the school to have a Linux (LTSP) based setup, completely in Bengali. This was the first big project we took on as a group, and it gave us some media coverage back then. It led to the bigger meetup in 2007, when NRCFOSS members, including lawgon and Rahul Sundaram, came down to Durgapur.

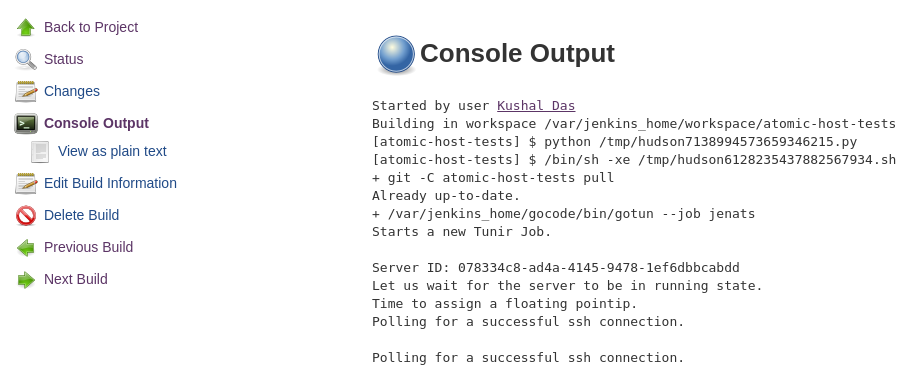

dgplug summer training 2008

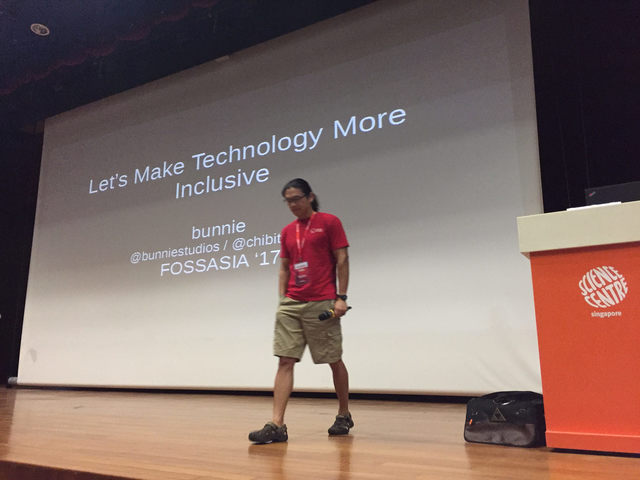

In 2008, I pitched the idea of having a summer training over IRC, following the same rules of meetings as Fedora marketing on IRC. Shakthi Kannan also glommed onto the idea, and that started a new chapter in the history of dgplug.

Becoming an active contributor community

I knew many people who were better than me when it came to brain power, but generally, there was no one to push them toward the idea of always learning new things. I guess the motto of dgplug, “Learn and teach others”, helped us get past this obstacle and build a community of friends who are always willing to help.

শেখ এবং শেখাও (Learn & Teach others).

Our people are from different backgrounds and various countries, but the idea of Freedom and Sharing binds us together in the group known as dgplug. Back in 2015 at PyCon India, we had a meeting of all the Python groups in India. After listening to the problems of all the other groups, I suddenly realized that we had none of those problems. We have no travel issues, no problems getting speakers, and no problem getting new people to join in. Just being on the Internet helps a lot. Also, people in the group have strong opinions, which sometimes means healthy but long discussions :)

Now, you may have noticed that I did not call the group a GNU/Linux users group. Unfortunately, by the time I learned about the Free Software movement and its history, it was too late to change the name. This year in the summer training, I will take a more in-depth session about the history of hacker ethics and the Free Software movement, and I know a few other people will join in.

The future

I wish that DGPLUG continues to grow along with its members. The group does not limit itself to software or technology. Most of the regular members met each other at conferences, and we keep meeting every year at PyCon India and PyCon Pune. We should be able to help others learn and use the same freedom (be it in technology or in other walks of life) that we have. The IRC channel should continue to be the happy place it always has been, where we all meet every day and have fun together.