Fedora Cloud WG during the last week of 2016-02

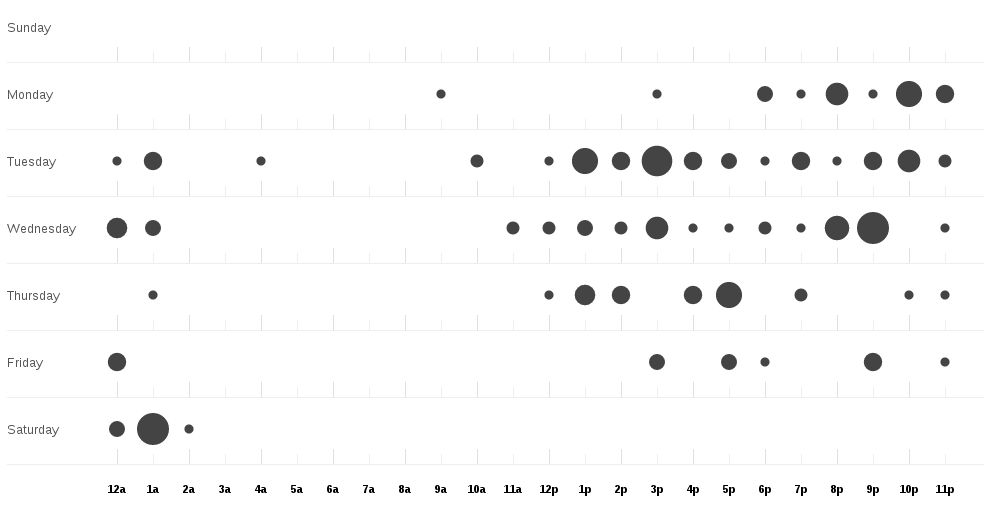

The Fedora Cloud Working Group meets every Wednesday at 17:00 UTC in the #fedora-meeting-1 IRC channel on the Freenode server. This week 15 people attended the meeting, which is within the usual range. The points that need to be discussed in the meeting are generally tracked on the fedorahosted Trac as tickets with the special keyword meeting. In other words, if you want something to be discussed in the next cloud meeting, add a ticket there with the meeting keyword.

After the initial roll call and a discussion of the action items from last week, we moved on to the tickets for this week's meeting. I had continued my action item of investigating how to add a CDROM device to the embedded Vagrantfile in the Vagrant images we generate. However, as that seems to be hardcoded inside Imagefactory, I am feeling less motivated to add any such thing there.

Then we moved on to the FAD proposal, which still needs a lot of work. The next major discussion was about the Fedora 24 changes from the Cloud WG. I have updated the ticket with the status of each change. It was also decided that nzwulfin will update the cloud list with more information about the Atomic Storage Clients change.

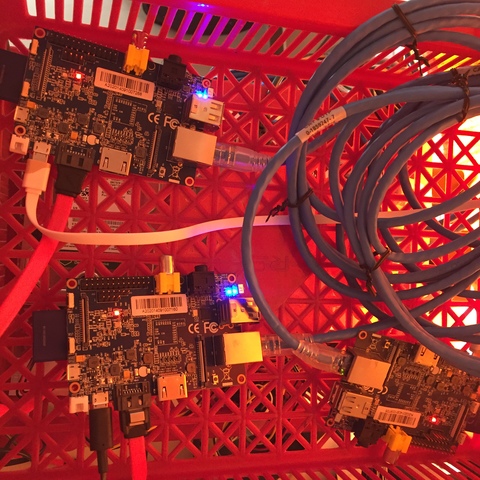

The next big discussion was about the Container "Packager" Guidelines and Naming Conventions. One of the major upcoming changes is the ability to build layered images on Fedora Infrastructure, the same way we build RPMs today. Adam Miller wrote the initial version of the documents for the packagers of those layered images. I have added my open questions as comments on the trac ticket, and others have started doing the same. Please take your time and go through both of the docs linked in that ticket. As containers now take up a major part of all the cloud discussions, this will be a very valuable guide for future container packagers.

I still have some open work left from this week. During Open Floor, Steve Gordon brought up the issues Magnum developers are facing while using Fedora Atomic images. I will be digging into that more this week. The log of the meeting can be found here. Feel free to join the #fedora-cloud channel if you have any questions.